Everything you should know about confidential computing

We encrypt data in storage and over the network, but what happens when we actually process it? Mostly, it’s sitting in memory, quite exposed.

A new frontier in data security is becoming production-ready. It’s called confidential computing, and it’s jumped straight up my list of “exciting boring technologies”.

In this post, we’ll explore confidential computing: what it is, how it works, and whether it’s worth your time.

TL;DR

Confidential computing closes a major security gap by protecting data while it’s in-use. With hardware-based “trusted execution environments”, it seals applications in a “black box” that is inaccessible to the host system or cloud provider. This enables verifiably private processing of sensitive data.

The technology is now practical: historical barriers of performance, cost and availability have largely been overcome with modern approaches. This makes it a strategic imperative for anyone handling regulated or highly sensitive data. For everyone else, it positions confidential computing as a future secure-by-default foundation of the cloud, making it a technology worth understanding today.

In the near future, “confidential computing” will just be “computing.”

Mark Russinovich, CTO at Azure

The case for confidential computing

All modern businesses process sensitive data. Personal, financial, trade secrets. Keeping that stuff safe is pretty important, and one of the ways we do that is by using encryption.

Traditionally, we protect data:

In-transit: where we encrypt it as it travels from one system to another.

At-rest: where we encrypt it before we store it.

These measures help prevent unauthorized access through man-in-the-middle attacks, data breaches and physical theft. But when that data is decrypted to be processed, it’s exposed to anyone with insider access. The security of your application alone isn’t enough: vulnerabilities in the underlying operating system or hypervisor are part of the attack surface, too.

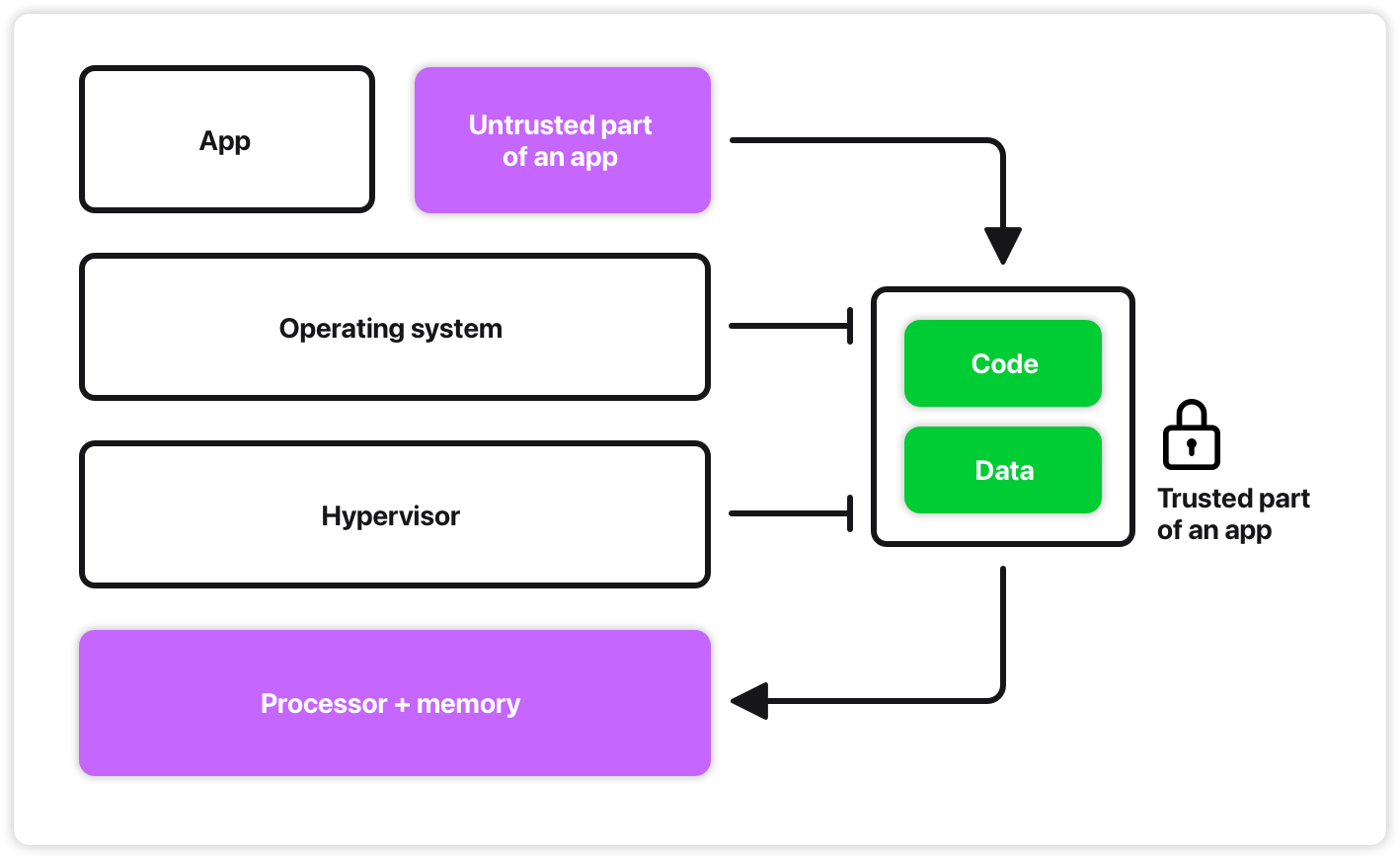

Confidential computing is a hardware-based technology that protects data and code in-use. It achieves this by having the processor create and enforce a dedicated processing environment, isolating your app from the host [1].

This solves big problems of trust. If an app is truly isolated, you don’t need to trust the operating system, the administrator, or your cloud provider. Just the hardware vendor. I’ve written previously on the risks of hosting EU data with US public cloud providers and this kind of technology can mitigate some of those risks. As it happens, the US tech industry is pushing pretty hard for confidential computing these days [2].

There’s more to it, but let’s get some context first.

Privacy-preserving computation

The core idea isn’t new. It stretches all the way back to the late 70’s with Rivest, Adleman, and Dertouzos’s work on “private homomorphism” [3].

In the 90’s, the idea of “Trusted Computing” started to take hold. This popularised the concept of the “Trusted Platform Module”, which made its way into consumer technology in the 00’s, enabling use cases like disk encryption [4]. As of Windows 11, Microsoft requires it [5].

The next big thing is the invention of “Fully Homomorphic Encryption” in 2009, which enables computation on encrypted data [6]. That’s a huge leap forward (!), but also extremely slow [7].

At this point, practical hardware-based security solutions pick up in popularity. Apple introduces its “Secure Enclave” in 2013 [8] and in 2015, Intel launches “Software Guard Extensions” (SGX), designed for secure remote computation and DRM [9]. This causes a growth spurt in practical use cases for confidential computing, leading to the formation of the Confidential Computing Consortium (CCC) under the Linux Foundation in 2019 [10].

Fast-forward to now.

In hardware, the big dogs are in: AMD SEV-SNP, Arm CCA, Intel SGX + TDX, and NVIDIA Confidential Computing.

In open source, we have projects like Contrast for Kubernetes, OpenHCL for VMs, and ManaTEE for data analysis.

The service providers are in, too. Azure has built a decent offering in confidential computing, like VMs, containers and managed services. European STACKIT provides confidential Kubernetes and VMs. We also have smaller vendors like Edgeless Systems with focused products like Privatemode AI.

How it works

There’s no doubt that the momentum is building for confidential computing. The real appeal is that it pushes trust down the stack: from trusting the software environment to trusting the computing hardware. That opens up new possibilities, like “data clean rooms”, privacy-preserving AI and bringing more sensitive workloads into the cloud.

But how does confidential computing establish that trust?

Trusted execution environments

It all begins with secure hardware. This is our “root of trust”. This, along with code designed to run within it, constitutes a “Trusted Execution Environment” (TEE).

Let’s take Intel SGX as an example:

An SGX-enabled processor (like Intel Xeon 6 [12]) reserves a region of main memory. This is where the processor will host “enclaves” which we can think of as “secure containers” for code and data.

When we create an enclave, our initial program is loaded from unprotected memory into enclave memory, passing through an encryption module using a key known only to the hardware itself.

When the processor loads that data into its cache, it passes it through the same module to decrypt it.

When it writes data back, that is also encrypted. So on, and so forth.

The programmer of the enclaved process decides what is ultimately committed to unprotected memory: some aggregate plaintext result, something encrypted or nothing at all [13].

The data is only ever plaintext inside the physical chip. Everything outside that boundary, including main memory, is encrypted. This creates a hardware-enforced black box that isolates the enclave from the host system.
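
To make that boundary concrete, here is a toy simulation in Python. It is only a sketch of the idea, not how SGX is implemented: the `ToyProcessor` class, its method names and the hash-based keystream are all assumptions made for illustration. Real hardware uses a dedicated memory encryption engine, and this toy cipher is not secure.

```python
import hashlib
import secrets


class ToyProcessor:
    """Toy model of a CPU whose memory-encryption key never leaves the chip."""

    def __init__(self):
        self._key = secrets.token_bytes(32)  # known only to the "hardware"
        self.main_memory = {}                # address -> bytes, visible to the host

    def _keystream(self, address, length):
        # Toy keystream: SHA-256 in counter mode, bound to the memory address.
        # This is a stand-in for a real hardware cipher and is NOT secure.
        out = b""
        counter = 0
        while len(out) < length:
            block = self._key + address.to_bytes(8, "big") + counter.to_bytes(8, "big")
            out += hashlib.sha256(block).digest()
            counter += 1
        return out[:length]

    def write(self, address, plaintext):
        # Data is encrypted on its way out of the chip.
        ks = self._keystream(address, len(plaintext))
        self.main_memory[address] = bytes(p ^ k for p, k in zip(plaintext, ks))

    def read(self, address):
        # Data is decrypted on its way back into the chip.
        ciphertext = self.main_memory[address]
        ks = self._keystream(address, len(ciphertext))
        return bytes(c ^ k for c, k in zip(ciphertext, ks))


cpu = ToyProcessor()
cpu.write(0x1000, b"patient record #1")
host_view = cpu.main_memory[0x1000]  # what a snooping host would see
inside_chip = cpu.read(0x1000)       # what the enclave code would see
```

The host can read `main_memory` as much as it likes, but without the key held inside the processor it only ever sees ciphertext.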

While SGX pioneered this approach, newer technologies like Intel TDX and AMD SEV-SNP apply the same ideas of hardware-enforced memory encryption to entire virtual machines and containers, making it easier to adopt for existing apps.

Remote attestation

If you have the required hardware, you can compute confidentially. Provided you trust the manufacturer to have designed it properly. But how can you know the hardware is legit? That the vendor didn’t swap it? Or the host isn’t lying to you?

If we’re thinking in principles of “zero-trust”, a cloud operator can’t be implicitly trusted. “Trust me bro, I’ve got TEEs” isn’t enough. We need assurances that our code and data are secure [14].

That’s where attestation comes in. Attestation is the process that lets the hardware prove that it’s a genuine TEE running your software. Think of it like certification. Here’s how it works:

When an enclave is created, the processor fingerprints its initial code and data. This is called a measurement. Any change to the code will result in a different measurement.

The processor creates a document called a quote (or report) that contains the measurement and maybe other data you will want to verify.

The report is signed with a private key that was embedded in the processor at the factory. It’s like the processor’s identity.

Your remote system receives the signed report. It verifies the signature using public certificates from the hardware vendor. Then, it checks that the measurement inside the report matches the fingerprint of the software it expected to run [15].

If these checks succeed, you have strong evidence that you’re interacting with your own, unmodified software inside a genuine trusted execution environment.
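
The steps above can be sketched in a few lines of Python. This is a simplified illustration under loud assumptions: real TEEs sign reports with an asymmetric private key and verifiers check a vendor certificate chain, whereas this sketch uses an HMAC with a shared `HARDWARE_KEY` purely to stay within the standard library. The function names, the report layout and the `nonce` field (an example of "other data you will want to verify") are hypothetical.

```python
import hashlib
import hmac
import json

# Stand-in for the key embedded in the processor at the factory (hypothetical).
# Real attestation uses asymmetric signatures plus vendor certificates.
HARDWARE_KEY = b"toy-hardware-key"


def measure(code):
    """Fingerprint the initial enclave contents."""
    return hashlib.sha256(code).hexdigest()


def create_report(code, nonce):
    """Processor side: measure the code, then sign the report."""
    body = {"measurement": measure(code), "nonce": nonce}
    payload = json.dumps(body, sort_keys=True).encode()
    signature = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_report(report, expected_measurement, nonce):
    """Verifier side: check the signature first, then the measurement."""
    payload = json.dumps(report["body"], sort_keys=True).encode()
    expected_sig = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_sig, report["signature"]):
        return False  # report was not produced by the "hardware"
    return (
        report["body"]["measurement"] == expected_measurement
        and report["body"]["nonce"] == nonce
    )


app_code = b"def handle(request): ..."
report = create_report(app_code, nonce="abc123")

ok = verify_report(report, measure(app_code), nonce="abc123")
modified = verify_report(report, measure(app_code + b"# backdoor"), nonce="abc123")
```

Here `ok` is true because the measurement matches the code we expected, while `modified` is false because even a one-line change to the code produces a different fingerprint.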

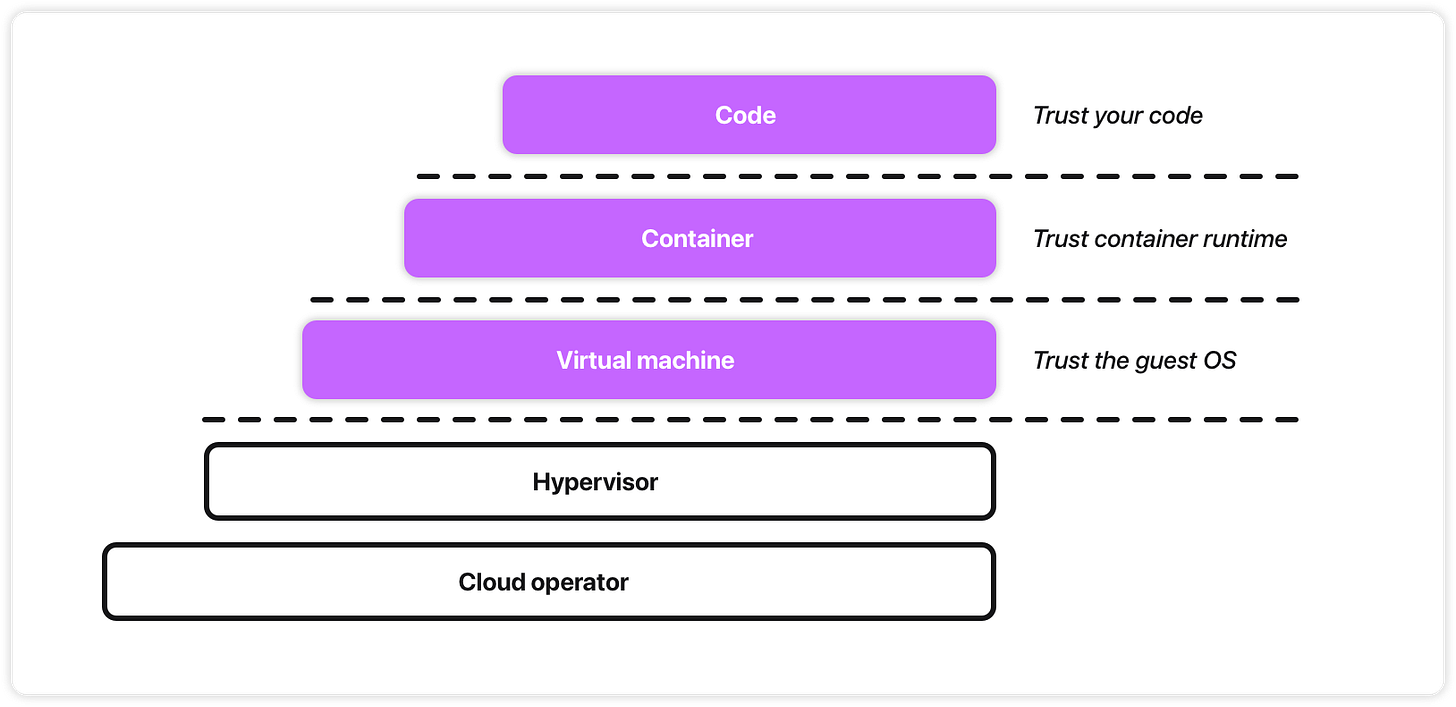

Levels of isolation

Confidential computing isn’t one single thing - it’s a variety of technologies and solutions with different approaches and tradeoffs.

One of the central factors is how different technologies approach memory isolation. We have 3 primary models [16].

Code

This is the isolation model introduced by Intel SGX. It wraps individual applications or even specific functions in a secure enclave. This offers great protection, as the “trusted computing base” is only your application code. But it’s not plug-and-play.

Virtual Machine

Intel TDX and AMD SEV-SNP focus on isolating an entire VM, protecting its memory from the host hypervisor. This model works for “lift-and-shift” migrations of existing workloads to a confidential environment, no code changes needed. The trade-off is a larger “trusted computing base” because you must trust the guest OS inside the VM.

Container

Container-level isolation builds on the hardware features of VM isolation (like TDX and SEV-SNP) to create a solution that works for cloud-native setups. Instead of running a full OS, it uses a minimal, specialized runtime to launch the container within the hardware-protected memory boundary.

This approach is easier to adopt than code-level enclaves (no code changes needed), and it offers a smaller attack surface than a full confidential VM, since you don’t need to trust an entire guest OS.

Downsides

Okay, if confidential computing is so great, why isn’t it everywhere yet? Where’s that hype train?

Security

While confidential computing raises the bar for security, it’s not invincible.

Researchers have demonstrated physical side-channel attacks. A recent example is TEE.Fail, where a custom hardware device physically intercepts memory traffic to extract secrets [17]. Other attacks have successfully inferred data by looking at CPU power consumption patterns [18].

This shows that even with memory encryption, the physical hardware is still an attack vector.

Availability

The big players are in on confidential computing, but the hardware isn’t exactly everywhere.

Not all cloud providers offer confidential computing, and those that do offer it only for a subset of machines and services.

At this time, Microsoft Azure probably has the most expansive catalog.

Performance

Memory encryption isn’t free.

For code-level isolation with Intel SGX, the overhead can be significant. For some operations, enclaved code can be >5x slower than native [19].

But when we look at VM/container-level isolation, the performance is much better. Just a few percentage points of overhead compared to equivalent unprotected cloud setups [20].

A 5x slowdown is a dealbreaker for most, but the minimal overhead of newer approaches is a far more acceptable compromise for the security it provides.

Costs

With the performance overhead described above, we can expect a slightly higher baseline cost per unit of work. Additionally, cloud providers tend to price confidential instances at a premium. This varies between vendors.

We also need to factor in that development for confidential computing can be more complex. If we make use of code-level isolation, we’ll require special SDKs and a different way of thinking about app architecture.

At this time, I would expect to spend 10-20% more on confidential workloads, depending on how you host.
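
As a rough back-of-the-envelope check, the two factors multiply: a slower workload needs more instance time, and each unit of instance time costs more. The sketch below uses hypothetical inputs (4% performance overhead, 10% price premium), not measured or quoted figures, and the `relative_cost` helper is made up for illustration.

```python
def relative_cost(perf_overhead, price_premium):
    """Cost per unit of work relative to a non-confidential baseline of 1.0."""
    return (1 + perf_overhead) * (1 + price_premium)


# Hypothetical example: 4% slower on an instance priced 10% higher.
print(f"{relative_cost(0.04, 0.10):.3f}")  # prints 1.144
```

With those assumed inputs the workload ends up about 14% more expensive. Plug in your own provider’s pricing and your own benchmark results to get a real number.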

Is it worth your time?

It depends on what you’re building. You should actively explore it today if you:

Handle highly sensitive data:

If you’re working with medical records, financial data or proprietary AI models, the extra layer of hardware-enforced protection could be a big win.

Need to collaborate on private data:

If you need to involve multiple parties to analyse a shared dataset without revealing raw data between parties, confidential computing enables this with “data clean rooms” and collaborative AI training.

Are subject to strict data regulation:

If you’re navigating complex regulation like GDPR/CCPA/HIPAA/PCI DSS, confidential computing lets you do more while staying compliant. It helps you prove that not even a cloud provider can access your data.

Want to build true zero-trust architecture:

If your goal is to minimize trust in your infrastructure and provider, confidential computing is the next frontier. It allows you to “assume breach” of the host environment and still protect your workload.

For everyone else...

The benefit will probably arrive silently. As the technology becomes more widespread and costs decrease, it is on a solid trajectory to become the default, secure foundation for the cloud.

And while “encrypting data in-use” is a good shorthand, the real innovation is the practical approach - a hardware-sealed black box, where data is only ever plaintext for the briefest of moments inside the box itself.

This is the idea that finally allows us to protect data through its entire lifecycle: at-rest, in-transit, and now, in-use. It might not be long before, as Russinovich said, “confidential computing” will just be “computing”.

Daniel Rothmann runs 42futures, where he helps technical leaders validate high-stakes technical decisions through structured software pilots.

Sources

[1] https://www.linuxfoundation.org/hubfs/Research%20Reports/TheCaseforConfidentialComputing_071124.pdf

[3] https://luca-giuzzi.unibs.it/corsi/Support/papers-cryptography/RAD78.pdf

[4] Trusted Computing by Dengguo Feng, Yu Qin, Xiaobo Chu, Shijun Zhao, 2017

[6] https://en.wikipedia.org/wiki/Homomorphic_encryption

[7] https://arxiv.org/pdf/2501.18371

[8] https://eclecticlight.co/2025/08/30/a-brief-history-of-the-secure-enclave/

[9] https://en.wikipedia.org/wiki/Software_Guard_Extensions

[11] https://dualitytech.com/blog/data-clean-room/

[13] https://eprint.iacr.org/2016/086.pdf

[14] Zero Trust Networks by Razi Rais, Christina Morillo, Evan Gilman, Doug Barth, 2024

[15] https://edera.dev/stories/remote-attestation-in-confidential-computing-explained

[16] Azure Confidential Computing and Zero Trust by Razi Rais, Jeff Birnbaum, Graham Bury, Vikas Bhatia, 2023

[17] https://thehackernews.com/2025/10/new-teefail-side-channel-attack.html

[18] https://arxiv.org/pdf/2405.06242v1

[19] https://www.ibr.cs.tu-bs.de/users/weichbr/papers/middleware2018.pdf